This is a writeup of the testing I've been doing with CeroWRT and a WNDR3800, to see if I can get better effective internet performance than I can with Free.fr's own router/DSL modem.

Hardware:

- Freebox Server v6 (http://www.freebox-v6.fr/), fw v2.0.5

- WNDR3800 running CeroWRT 3.10.24-8

- 17" MacBook Pro

These tests are all wired. The Freebox Server has 100Mbps Ethernet and the WNDR3800 has gigabit Ethernet; both are well above the actual line rates, as reported by the Freebox's own firmware:

upload: 1171 Kbps

download: 21181 Kbps

These are assumed to be "raw" line rates (the link-layer rates), not TCP data rates. Because the unit talks to the DSLAM using ADSL2+, the rates achievable by TCP/IP will be lower, due to the overhead of any VLAN tagging and of ATM. I don't have good details on the exact structure of the Free.fr DSL network, but future work will include attempting to determine what that overhead is.
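As a rough sketch of what ATM alone might cost, the Python below assumes that the reported rates are ATM cell rates, that each IP packet plus a fixed per-packet overhead is split into 48-byte cell payloads carried in 53-byte cells (with the last cell padded), and that TCP/IP headers take 40 bytes. The 44-byte overhead is borrowed from the CeroWRT starting guidance used later in this write-up; the real Free.fr encapsulation is still unknown, and the function names are hypothetical.

```python
import math

ATM_CELL_BYTES = 53     # one ATM cell on the wire: 5-byte header + 48-byte payload
ATM_PAYLOAD_BYTES = 48  # usable payload bytes per cell


def atm_wire_bytes(packet_bytes: int, overhead_bytes: int) -> int:
    """Bytes a packet occupies on an ATM link, counting the padded last cell."""
    cells = math.ceil((packet_bytes + overhead_bytes) / ATM_PAYLOAD_BYTES)
    return cells * ATM_CELL_BYTES


def tcp_goodput_kbps(line_rate_kbps: float, overhead_bytes: int,
                     mtu: int = 1500, tcpip_header_bytes: int = 40) -> float:
    """Estimated TCP payload rate for a stream of full-sized packets."""
    payload_bytes = mtu - tcpip_header_bytes
    return line_rate_kbps * payload_bytes / atm_wire_bytes(mtu, overhead_bytes)


if __name__ == "__main__":
    for direction, rate in (("download", 21181), ("upload", 1171)):
        print(f"{direction}: ~{tcp_goodput_kbps(rate, 44):.0f} kbps of TCP payload")
```

With these assumptions a 1500-byte packet needs 33 cells (1749 bytes on the wire), leaving about 83% of the line rate for TCP payload, so the script prints roughly 17681 kbps down and 978 kbps up.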

Test Setup

Tests are all performed using two measurement mechanisms:

- netperf (rrul, tcp_download, and tcp_upload tests)

- Ookla's speedtest.net

Speedtest.net is included because it's a ready test that anyone can run, and its results have been shown to be affected by various settings.

The target server for netperf is in Germany, about 55ms away, and is serviced with a 1Gbps link.

A note on ECN: ECN is enabled in both directions on the MacBook Pro, at the test server, and in the CeroWRT router (even though it is not a default setting).

Results

What follows is a baseline test of the Freebox, and then a series of tests while the WNDR3800 is configured per current best practices, from the guidance at the CeroWRT wiki:

http://www.bufferbloat.net/projects/cerowrt/wiki/Setting_up_AQM_for_CeroWrt_310

Freebox baseline results:

Speedtest.net: 18.07Mbps (download) / 0.99Mbps (upload), 31ms ping

These results are, frankly, quite good, and far better than I've had in other places. With the uplink fully saturated, the median ping time rose by about 15ms, and the 90th percentile by around 25ms.
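Figures like "15ms larger at the median" come from comparing ping-time percentiles under load against an idle baseline. A minimal Python sketch of one way to compute that comparison, assuming you already have two lists of ping times in milliseconds (for example, parsed from `ping` output); the function name is hypothetical, and the percentile method used here (the `statistics` module's default) may differ from the one the plotting tools use.

```python
import statistics


def latency_increase_ms(idle_ms: list[float], loaded_ms: list[float]) -> dict[str, float]:
    """Median and 90th-percentile ping increase of loaded samples over idle ones."""

    def summary(samples: list[float]) -> tuple[float, float]:
        # quantiles(n=10) returns the 9 decile cut points; index 8 is the 90th percentile
        return statistics.median(samples), statistics.quantiles(samples, n=10)[8]

    idle_median, idle_p90 = summary(idle_ms)
    loaded_median, loaded_p90 = summary(loaded_ms)
    return {"median": loaded_median - idle_median, "p90": loaded_p90 - idle_p90}
```

Each list needs at least two samples for `statistics.quantiles` to work; in practice a few hundred pings per condition give much steadier percentiles.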

The TCP download throughput achieved by netperf, at ~13Mbps, is quite good, and the speedtest.net results are also very good.

The two sets of numbers differ considerably, which is to be expected given the differences in how they are measured.

WNDR3800 running CeroWRT with default settings

Speedtest.net results: 17.18 / 0.99 Mbps, ping 32ms

At this time, I still don't understand what's happening with UDP, but whenever I set up a double-NAT situation, the netperf UDP tests all behave very strangely. I'm not sure if that's a CeroWRT or Freebox issue.

002 - Rate limited interfaces

The first step of the tuning process is to limit the "internet"-side interface to either 85% of the advertised bandwidth or 95% of the known measured bandwidth. As the Freebox reports the line rate, we'll use 95% of that:

20181 kbps down

1112 kbps up
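The 85%/95% rule is simple arithmetic; a minimal Python sketch of it is below (the function name is hypothetical, and rounding to the nearest kbps is an assumption). For reference, 95% of 21181 kbps is about 20122 kbps, a little below the 20181 kbps download figure listed above, while 95% of 1171 kbps does round to 1112 kbps.

```python
def shaper_rate_kbps(rate_kbps: float, measured: bool) -> int:
    """Starting shaper rate: 95% of a measured rate, or 85% of an advertised one."""
    fraction = 0.95 if measured else 0.85
    return round(rate_kbps * fraction)


if __name__ == "__main__":
    # The Freebox-reported line rates, treated as "measured" as in the text above
    print(shaper_rate_kbps(21181, measured=True))  # download
    print(shaper_rate_kbps(1171, measured=True))   # upload
```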

Speedtest.net results: 18.09 / 1.01 Mbps, ping: 31ms

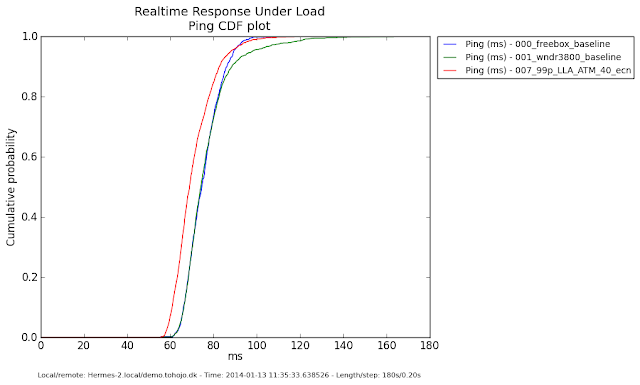

Checkpoint for comparison

Here's a graph of the ICMP ping-time CDF for the three tests run so far:

No gains over the Freebox, and perhaps a slight loss at the high-latency end.

003 - Link-Layer Adaptation

One of the interesting problems with DSL links, which are heavily encapsulated in other protocols/technologies, is that the rate limiter can't correctly calculate how much of the actual line rate each packet uses, so it needs some parameters for overhead estimation.

The simple guidance is that, on a DSL-based link, you should start with the following:

link-layer adaptation: ATM

per-packet overhead: 44 bytes

This shouldn't have much, if any, effect on bulk traffic, but could have a big impact on small packets (where the overhead is a greater percentage of the packet size). Except that it does affect bulk traffic:
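A simplified ATM cell model suggests why bulk traffic is affected too: even a full-sized packet pays for the 5-byte header on every 53-byte cell and for padding in its last cell. A minimal Python sketch, assuming the 44-byte per-packet overhead is simply added to the IP packet length before it is split into cells (a simplification of what the shaper actually accounts for), comparing what a shaper without link-layer adaptation would count with what actually hits the line:

```python
import math

ATM_CELL_BYTES = 53     # bytes per ATM cell on the wire
ATM_PAYLOAD_BYTES = 48  # payload bytes per cell


def atm_wire_bytes(packet_bytes: int, overhead_bytes: int) -> int:
    """Bytes on the wire for one packet, including per-packet overhead and cell padding."""
    cells = math.ceil((packet_bytes + overhead_bytes) / ATM_PAYLOAD_BYTES)
    return cells * ATM_CELL_BYTES


if __name__ == "__main__":
    for size in (64, 576, 1500):
        wire = atm_wire_bytes(size, 44)
        # Ratio of real line usage to the packet size a shaper without LLA would count
        print(f"{size:5d}-byte packet -> {wire:5d} bytes on the wire ({wire / size:.2f}x)")
```

Under these assumptions a 64-byte packet uses about 2.5 times its size on the wire, but even a 1500-byte packet uses about 17% more than its nominal size, which may help explain why bulk throughput dropped as well.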

speedtest.net results: 16.45 / 0.87 Mbps, ping 32ms

At this point, I'm fairly certain that something is wrong with the tests, due to the very uneven throughput in this run, and the fact that the UDP realtime response measurements are broken, with only ICMP working correctly.

That said, enabling link-layer adaptation appears to have improved latency under load, unless this is an artifact of the unusual results above.

004 - Less limited

For this set of settings, I changed the router's bandwidth limiting to the line rates reported by the modem, relying on the link-layer adaptation to do the requisite overhead compensation. If the overhead is estimated incorrectly, this will allow a standing queue to build up over time, and it may do so even if the estimate is right.
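To see why an overestimated rate leads to a standing queue, here is a simple fluid-model sketch in Python. It is an idealization, not a description of how the Freebox actually buffers traffic: if the shaper lets traffic out faster than the line can carry it, the excess piles up in the modem's buffer, and queueing delay grows until that buffer is full. Both rates must be in the same units, and the buffer size is a hypothetical parameter, since the Freebox's buffer size isn't known here.

```python
def standing_queue_delay_ms(shaped_kbps: float, line_kbps: float,
                            seconds: float, buffer_bytes: int) -> float:
    """Queueing delay after `seconds` of saturating traffic, in a simple fluid model.

    Traffic leaves the shaper at shaped_kbps but drains from the modem at
    line_kbps; any excess accumulates in a (hypothetical) buffer of buffer_bytes.
    """
    excess_bits = max(0.0, shaped_kbps - line_kbps) * 1000 * seconds
    queued_bits = min(excess_bits, buffer_bytes * 8)
    return queued_bits / (line_kbps * 1000) * 1000  # seconds -> milliseconds


if __name__ == "__main__":
    # Hypothetical: shaper set 5% above what the line really carries, 256 KB buffer
    for t in (1, 5, 10, 30):
        print(t, round(standing_queue_delay_ms(1171, 1171 / 1.05, t, 256 * 1024)))
```

If the shaper rate is at or below the real capacity, the model's delay stays at zero; any overestimate makes the delay grow steadily until the buffer caps it.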

bandwidth limits: 21181 / 1171 kbps

link-layer adaptation: ATM with 44 byte overhead

speedtest.net results: 17.32 / 0.88 Mbps, ping: 32ms

The increased bandwidth came at the cost of an increase in latency, although it's still less than the Freebox's own latency.

005 - 99% of limit

Out of curiosity, I ran another test where I set the bandwidth just a hair under (99% of) the rates reported by the modem, to see if this tiny bit of headroom had a positive or negative impact (or helped add headroom to a mis-estimated overhead for the ADSL encapsulation).

bandwidth limits:

21000 kbps down

1160 kbps up

link-layer adaptation: ATM with 44 byte overhead

speedtest.net results: 17.12 / 0.92 Mbps, ping 32ms

No real gain in latency vs. the 100% of line rate bandwidth limit:

The results show that some of the bandwidth is back (95% of original download performance, 92% of upload performance), and the realtime response is still under that of the base Freebox most of the time.

006 - reduced LLA overhead estimate

Given that the overhead is an estimate, let's try a slightly smaller estimate, and see if it makes any difference: 40 bytes instead of 44.

bandwidth limit:

21000 kbps down

1160 kbps up

link-layer adaptation: ATM with 40 byte overhead

speedtest.net results: 17.09 / 0.87 Mbps, ping 32ms

Contrary to my expectations, reducing the LLA overhead estimate seems to have improved response, and perhaps penalized upload performance. But upload performance is hard to measure accurately via the speedtest.net benchmark, so this may just be measurement noise.

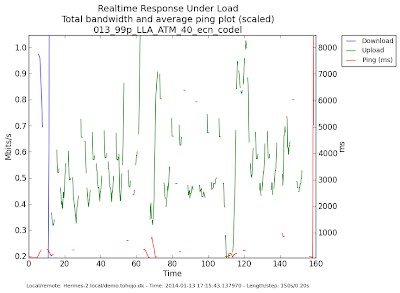

007 - no changes, just more tests

So I re-ran the tests with the same settings, and then also ran the netperf tcp_download and tcp_upload tests to get an idea of how those were performing.

Speedtest.net results: 17.11 / 0.90 Mbps, ping 32ms

And here are the dedicated tcp_download results (1 socket, with pings):

And dedicated tcp_upload results (1 socket, with pings):

Here, netperf reports an upload rate just below 1Mbps, and the added latency is impressively low:

The above rrul test run, for context with the rest of the runs:

The 40 byte overhead estimate seems to bring realtime response back to the level it was at with a 95% bandwidth limit. As a result, I'm going to walk that space 4 bytes at a time, but that's a separate write-up, as this one is long enough already.
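Before walking the overhead estimate 4 bytes at a time, it may help to know which packet sizes a given step actually affects. A minimal Python sketch, assuming the overhead is simply added to the IP packet length before it is split into 48-byte ATM cell payloads; the 40 to 1500 byte size range and the function names are assumptions for illustration.

```python
import math

ATM_PAYLOAD_BYTES = 48      # payload bytes per ATM cell
SIZES = range(40, 1501)     # IP packet sizes to check (assumed range)


def atm_cells(packet_bytes: int, overhead_bytes: int) -> int:
    """Number of ATM cells needed for a packet plus per-packet overhead."""
    return math.ceil((packet_bytes + overhead_bytes) / ATM_PAYLOAD_BYTES)


def sizes_affected(overhead_a: int, overhead_b: int, sizes: range = SIZES) -> list[int]:
    """Packet sizes whose cell count differs between two overhead estimates."""
    return [s for s in sizes if atm_cells(s, overhead_a) != atm_cells(s, overhead_b)]


if __name__ == "__main__":
    # Walk the overhead estimate 4 bytes at a time
    for overhead in range(32, 52, 4):
        affected = sizes_affected(overhead, overhead + 4)
        print(f"{overhead} -> {overhead + 4} bytes: "
              f"{len(affected)} of {len(SIZES)} packet sizes change cell count")
```

Because a cell holds 48 bytes, each 4-byte step changes the cell count for only about 1 in 12 packet sizes. A 1500-byte packet, for instance, needs 33 cells with either 40 or 44 bytes of overhead, so under this model any difference between the two estimates comes from packet sizes that sit just past a 48-byte boundary, not from full-sized bulk packets.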

Conclusions

I'm happy with these final results. Here's the final vs. where I started:

This is consistently a few ms better than the Freebox on its own, at a small sacrifice in overall bandwidth:

Freebox: 18.07 / 0.99 Mbps

Final: 17.11 / 0.90 Mbps

This is roughly a 5% penalty on download and a 9% penalty on upload.
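These percentages can be reproduced from the speedtest.net numbers in this write-up. A small Python sketch that tabulates every run against the Freebox baseline; the labels are shorthand for the section headings above, and the function name is hypothetical.

```python
BASELINE = ("Freebox", 18.07, 0.99)  # name, download Mbps, upload Mbps

RUNS = [
    ("CeroWRT defaults", 17.18, 0.99),
    ("002 - rate limited", 18.09, 1.01),
    ("003 - ATM, 44 bytes", 16.45, 0.87),
    ("004 - 100% line rate", 17.32, 0.88),
    ("005 - 99% of limit", 17.12, 0.92),
    ("006 - 40 byte overhead", 17.09, 0.87),
    ("007 - repeat of 006", 17.11, 0.90),
]


def penalty_pct(value: float, baseline: float) -> float:
    """Percentage shortfall of value relative to baseline (negative means faster)."""
    return (1 - value / baseline) * 100


if __name__ == "__main__":
    _, base_down, base_up = BASELINE
    for name, down, up in RUNS:
        print(f"{name:24s} down {penalty_pct(down, base_down):5.1f}%  "
              f"up {penalty_pct(up, base_up):5.1f}%")
```

For the final settings this works out to about 5.3% on download and 9.1% on upload. Each speedtest.net figure is a single run, so differences of a few percent may be noise.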

Even though my day-to-day use is dominated by downloads and latency, I wish the upload penalty weren't so large.