These are just some raw results; I haven't done any tuning yet (I need to do other things today). But I finally got around to contacting Comcast support and getting the modem switched to full bridge mode. Since it's their combo modem/AP/router/VoIP unit, support has to do it on the backend. Live-chat support was able to do it (and was able to stay operational through all the IP address changes, which was nice).

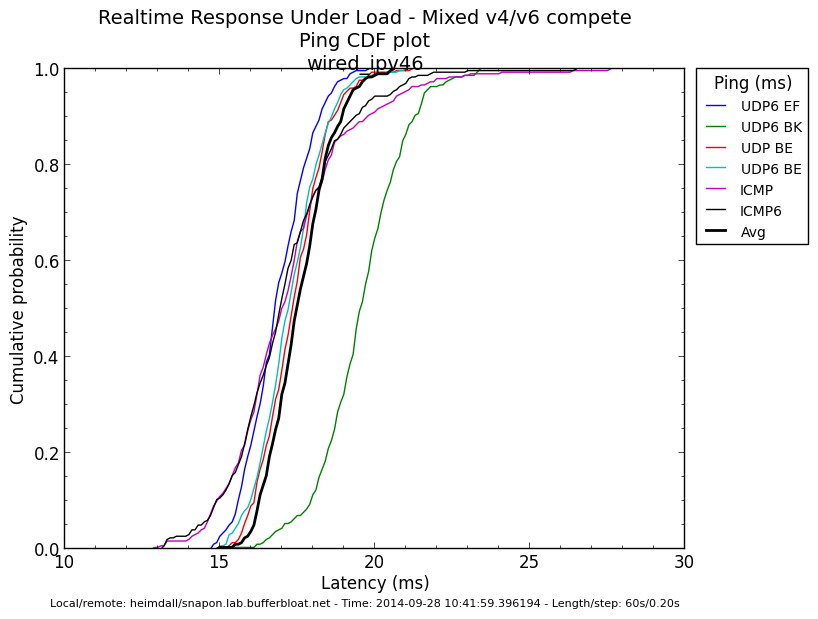

But the WNDR3800 is certainly at (or past) the edge of what it can handle at these data rates, using the simple.qos script with:

download (ingress): 100000 Kbps

upload (egress): 12000 Kbps

squash ingress DSCP

ECN enabled in both directions (but not enabled on the local end of the test)
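For reference, "squash ingress DSCP" and ECN both live in the same byte of the IP header: the top 6 bits are the DSCP and the bottom 2 bits are the ECN field. Here's a minimal Python sketch of that bit layout, assuming that squashing means re-marking the DSCP to 0 (best effort) while leaving the ECN bits alone. I haven't checked that simple.qos does it exactly this way, so treat it as an illustration of the header layout, not of the script; the function names are made up for the example.

```python
# The IPv4 TOS / IPv6 Traffic Class byte: upper 6 bits = DSCP, lower 2 bits = ECN.

DSCP_EF = 46   # Expedited Forwarding
DSCP_CS1 = 8   # class selector 1, commonly used for background ("BK") traffic

# ECN codepoints (RFC 3168)
ECN_NOT_ECT = 0b00
ECN_ECT1 = 0b01
ECN_ECT0 = 0b10
ECN_CE = 0b11


def make_tos(dscp: int, ecn: int) -> int:
    """Pack a 6-bit DSCP and a 2-bit ECN codepoint into one byte."""
    return ((dscp & 0x3F) << 2) | (ecn & 0x03)


def dscp_of(tos: int) -> int:
    """Extract the DSCP (upper 6 bits)."""
    return (tos >> 2) & 0x3F


def ecn_of(tos: int) -> int:
    """Extract the ECN field (lower 2 bits)."""
    return tos & 0x03


def squash_dscp(tos: int) -> int:
    """Hypothetical squash: re-mark DSCP to 0 (best effort) but keep the
    ECN bits, so congestion marking still works afterwards."""
    return tos & 0x03


if __name__ == "__main__":
    tos = make_tos(DSCP_EF, ECN_ECT0)
    squashed = squash_dscp(tos)
    print(f"before: 0x{tos:02x} dscp={dscp_of(tos)} ecn={ecn_of(tos):02b}")
    print(f"after:  0x{squashed:02x} dscp={dscp_of(squashed)} ecn={ecn_of(squashed):02b}")
```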

So, here's a bunch of graphs:

Sunday, September 28, 2014

Friday, August 29, 2014

New Comcast Speeds -> New CeroWRT SQM Settings

Comcast upped their line-rates in my area recently, which meant I needed to up the settings I use with CeroWRT to harness that bandwidth with reasonable latency (vs. the unreasonable latency that the cablemodems have by default).

Our service was previously 50/10 Mbps (nominal), although in practice I saw that it was a bit above that (maybe 10% or so). Comcast just upped that to 100/10 (nominal).

Raw testing:

The raw rates (laptop plugged directly into the modem) show 120Mbps down, 10Mbps up, and 120ms of bufferbloat latency. Both the throughput and the latency are improvements over the previous setup. I'm wondering if the modem is now running PIE... The latency is about half what it was when I set up the service back in May.

And 120Mbps is awfully nice...

But the WNDR3800 was set for slower settings:

Wonderful (lack of) latency, but 50Mbps is a lot less than 120Mbps, so time to start upping the download rate limiting and see what the unit can do:

Unfortunately, the WNDR3800 just doesn't have the processing power to pull off much more than 50Mbps (as I previously knew). Setting the download rate at 100Mbps, it can only manage about 70-75Mbps, and the graphs are noisy enough to show that the unit isn't able to correctly run the algorithms.
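A rough way to see why the router runs out of steam: the shaper does its work per packet, and the packet rate scales with the bandwidth. This back-of-the-envelope sketch assumes every packet is a full 1500 bytes (real traffic also has smaller packets, which only makes it worse) and that per-packet cost is what dominates; that second part is my assumption, not something I measured.

```python
def packets_per_second(rate_mbps: float, packet_bytes: int = 1500) -> float:
    """Packets per second needed to fill `rate_mbps` with `packet_bytes`-byte packets."""
    return rate_mbps * 1_000_000 / (packet_bytes * 8)


if __name__ == "__main__":
    # 50 Mbps: what the unit handled before; 75: roughly what it managed
    # when asked for 100; 100: the new ingress setting.
    for rate in (50, 75, 100):
        print(f"{rate:>3} Mbps -> {packets_per_second(rate):6.0f} packets/s")
```

Going from 50 to 100Mbps doubles the packets per second the CPU has to shape, from roughly 4,200 to 8,300 full-size packets.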

Final settings:

CeroWrt Toronto 3.10.36-4

fq_codel

100000 Kbps ingress limit

12000 Kbps egress limit

ECN enabled (both directions)

Addendum: I tried disabling downstream shaping, to see whether upstream shaping alone would control latency well enough. The results were not confidence-inspiring:

Certainly much better than with no upstream shaping, but the extra latency goes from 5ms to 15ms, and the sharing/fairness of the streams comes apart.

If only Comcast would just fix this in the CMTS and in the modems, instead of forcing us to do this on our own...

Monday, July 7, 2014

NuForce Dia Amplifier: Binding Post Replacement

I have a NuForce Dia that I picked up while we were in France, to drive some bookshelf-sized speakers in the apartment there. It's a nice, small (ok, tiny) amplifier: Class-D, 24Wx2 into 4 ohms. It's not much bigger than the Apple TV that was feeding it.

It sounds great, and easily fills smaller spaces (and not so small spaces), but it's not going to ever be punchy.

Except, it uses spring-clip style binding posts:

As spring-clips go, they're pretty good, but it's not a style of interconnect that I really care much for. Especially when the very nice speaker cables that I have already have banana plugs crimped/soldered onto them.

So, I ordered some new posts from Parts Express, and then went to work.

4 screws front and back, and a thin nut under the volume knob, hold everything together. Then the board slides right out.

It looks like NuForce has some rather custom components inside.

Anyway, the big question is how the binding posts are held in:

Which are just screws. But access is blocked by the components on the board, so 4 more screws to remove the PCB from the metal tray, and then we can unscrew the wires from the binding posts.

The new binding posts had tabs, which weren't necessary as the ring terminals on the leads from the PCB just fit.

And then it goes back together the reverse of how it came apart.

Total time? Less than 30 minutes, including the time to stop and take photos as I went.


And it sounds pretty fantastic with the Maggies. The Magnepan MMGs are maybe a bit bright, and this amp really provides a lot of detail for them to resolve. Overall, I'd say they're a good match. But more listening is required (more listening is always required).

Tuesday, May 27, 2014

Disabling shaping in one direction with CeroWRT

Another test Dave Taht asked for was to disable the downstream shaper and see what happened. It wasn't pretty. So I also disabled the upstream shaper. Also not pretty. Then I went back to using the shaper enabled in both directions.

Baseline using fq_codel and 54.5Mbps downstream limit and 10Mbps upstream limit:

Now, turning off the downstream shaper yields:

This indicates (to me), that there's very excessive buffering at the CMTS, and the downstream shaper is badly needed to keep it under control.

So I re-enabled downstream, and disabled the upstream shaper:

Again, not ideal. It's interesting seeing how the shapers interact with the traffic in the other direction as well. Because each direction's ACKs travel over the other path, disabling shaping on one direction clearly affects the other.
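To put a rough number on that interaction, here's an estimate of how much upstream capacity the ACKs for a full-speed download use. The inputs are assumptions, not measurements: 1500-byte data packets, one ACK per two data packets (delayed ACK), and 64 bytes per ACK on the wire.

```python
def ack_rate_mbps(down_mbps: float,
                  data_packet_bytes: int = 1500,
                  packets_per_ack: int = 2,
                  ack_bytes: int = 64) -> float:
    """Upstream bandwidth (Mbps) used by ACKs for a downstream transfer."""
    data_pps = down_mbps * 1_000_000 / (data_packet_bytes * 8)
    ack_pps = data_pps / packets_per_ack
    return ack_pps * ack_bytes * 8 / 1_000_000


if __name__ == "__main__":
    down = 54.5  # downstream shaper setting in the baseline test (Mbps)
    up = 10.0    # upstream shaper setting (Mbps)
    acks = ack_rate_mbps(down)
    print(f"ACKs: {acks:.2f} Mbps, {100 * acks / up:.0f}% of the upstream limit")
```

Under those assumptions the ACKs alone take a bit over 1Mbps, more than a tenth of the upstream. When the upstream queue bloats, those ACKs sit in it too, which slows the downloads.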

I don't like this PIE (more bufferbloat testing)

One of the other algorithms for dealing with bufferbloat is PIE. This is Cisco's answer to the problem, and the one CableLabs standardized on for inclusion in DOCSIS 3.1. While it's an improvement over the big buffers and FIFO queues that exist now, I don't think it compares to what fq_codel can do.
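For the curious, here's a heavily simplified sketch of how PIE decides when to drop: every update interval it nudges a drop probability up or down, based on how far the queue delay is from a target and whether the delay is rising or falling. The constants are the defaults suggested in RFC 8033 (15ms target, alpha = 0.125, beta = 1.25, with delays in seconds); the DOCSIS variant differs in some details, and this sketch leaves out PIE's auto-tuning and burst allowance entirely. Note that it's still one queue for everything, with no per-flow separation.

```python
import random

QDELAY_REF = 0.015  # target queueing delay (seconds)
ALPHA = 0.125       # weight on the distance from the target
BETA = 1.25         # weight on the delay trend (rising or falling)


class PieSketch:
    """Simplified PIE drop-probability controller (no auto-tuning, no burst allowance)."""

    def __init__(self) -> None:
        self.p = 0.0
        self.qdelay_old = 0.0

    def update(self, qdelay: float) -> float:
        """Call once per update interval with the current queue-delay estimate (s)."""
        self.p += ALPHA * (qdelay - QDELAY_REF) + BETA * (qdelay - self.qdelay_old)
        self.p = min(max(self.p, 0.0), 1.0)  # keep it a valid probability
        self.qdelay_old = qdelay
        return self.p

    def should_drop(self, rng: random.Random) -> bool:
        """Drop (or ECN-mark) an arriving packet with probability p."""
        return rng.random() < self.p


if __name__ == "__main__":
    pie = PieSketch()
    for qdelay in (0.005, 0.050, 0.080, 0.080, 0.060, 0.020):
        print(f"qdelay={qdelay * 1000:3.0f} ms -> p={pie.update(qdelay):.4f}")
```

Because the controller only reacts to the average delay of the shared queue, a sparse flow (a game, a VoIP call, a DNS lookup) still waits behind whatever standing queue the bulk flows leave.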

Here's the "before", my raw, unmanaged, Comcast "Blast" 50 down, 10Mbps up connection:

Lots of bandwidth, and when loaded, lots of latency. Baseline latency to the test server is ~14ms. Bufferbloat is adding over 250ms of latency. This is why your connection is crap during big downloads.
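The relationship between that latency and the buffer is simple: queueing delay is the amount of data sitting in the buffer divided by the rate it drains at, so the buffer holds delay × rate. A small sketch using the 250ms figure above; which direction's buffer is holding that delay is an assumption here, so it shows both rates.

```python
def queued_bytes(delay_ms: float, rate_mbps: float) -> float:
    """Bytes that must be queued to cause `delay_ms` of delay at `rate_mbps`."""
    return delay_ms / 1000 * rate_mbps * 1_000_000 / 8


if __name__ == "__main__":
    for label, rate in (("downstream", 50.0), ("upstream", 10.0)):
        kb = queued_bytes(250, rate) / 1000
        print(f"250 ms at {rate:g} Mbps {label}: ~{kb:,.0f} KB queued")
```

That's about 312KB sitting in the upstream buffer, or about 1.5MB in the downstream one.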

Now, PIE does make this better. LOTS better:

From 250ms down to 60-80ms: about 1/4 the latency. Not bad, but not great, either. It's still 3-4x the latency that it could have:

fq_codel simply erases all the latency on a connection like this, and this was over a 4-minute sustained test. It pushes over 50Mbps downstream and over 10Mbps upstream, and the numbers in the middle graph are achieved data rates, not including the TCP ACK packets or other overhead.
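Part of the difference is that fq_codel gives each flow its own queue and then runs CoDel on each one. The CoDel part is sketched below: it tolerates short bursts, only starts dropping once the queue delay has stayed above a 5ms target for a 100ms interval, and then spaces drops at interval/sqrt(count), so they come faster the longer the queue persists. This covers only the control law (no flow queueing, no logic for leaving the dropping state), and the function names are made up.

```python
import math

TARGET_MS = 5.0      # acceptable standing-queue delay
INTERVAL_MS = 100.0  # how long the delay must stay above TARGET_MS before dropping


def should_start_dropping(sojourn_ms: float, above_target_for_ms: float) -> bool:
    """Short bursts are tolerated: only start dropping once the queue delay
    has stayed above TARGET_MS for at least INTERVAL_MS."""
    return sojourn_ms > TARGET_MS and above_target_for_ms >= INTERVAL_MS


def drop_schedule(first_drop_ms: float, drops: int) -> list[float]:
    """Times of successive drops while the delay stays above target.

    After the count-th drop, the next one comes INTERVAL_MS / sqrt(count)
    later, so drops get closer together until the standing queue drains."""
    times = [first_drop_ms]
    for count in range(1, drops):
        times.append(times[-1] + INTERVAL_MS / math.sqrt(count))
    return times


if __name__ == "__main__":
    print(should_start_dropping(sojourn_ms=20.0, above_target_for_ms=40.0))
    print(should_start_dropping(sojourn_ms=20.0, above_target_for_ms=120.0))
    print([round(t, 1) for t in drop_schedule(0.0, 5)])
```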

So, yeah, PIE.

It's ok. But I like fq_codel a LOT better.

Sunday, May 25, 2014

Fixing Bufferbloat on Comcast's "Blast" 50/10Mbps Service

At our new apartment here in the Bay Area, I ended up going with Comcast after realizing that it was about my only option for high speeds.

But I knew it was going to need some love to make it work. It turned out to take very little effort to get pretty fantastic results.

First, latency under full load (upstream and downstream), to a server that's very close:

After a bit, it settles into 350ms of buffer-induced latency. OTOH, this is pretty screaming fast on downlink (58Mbps!), but the uplink is suspicious looking. It gets the advertised 10Mbps for about 10 seconds, and then falls apart.

I put in my WNDR3800 running CeroWRT (a bleeding-edge OpenWRT variant: http://www.bufferbloat.net/projects/cerowrt). Then I set the qos parameters based on the above measurements:

ingress rate limit: 54.5Mbps

egress rate limit: 10Mbps

using simple.qos, and with ecn enabled (both directions).
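Expressed as a fraction of the raw rates measured above, those settings look like this. It's just arithmetic on this post's numbers. The ingress limit sits a bit under the measured rate so that the queue builds in the router, where fq_codel can manage it, instead of in the modem. The egress limit is right at the advertised 10Mbps.

```python
def fraction_of_raw(shaper_mbps: float, measured_mbps: float) -> float:
    """Shaper setting as a fraction of the measured raw line rate."""
    return shaper_mbps / measured_mbps


if __name__ == "__main__":
    # (shaper setting, measured raw rate), both in Mbps, from this post
    settings = {"ingress": (54.5, 58.0), "egress": (10.0, 10.0)}
    for direction, (shaper, raw) in settings.items():
        print(f"{direction}: {shaper} of {raw} Mbps = {fraction_of_raw(shaper, raw):.0%}")
```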

Results:

I was amazed. Ruler-flat throughput at the advertised rates, good sharing between the various buckets (the first time I've had enough bandwidth to see that actually work), and about 5ms of buffer-induced latency. Unfortunately, the WNDR3800 is at its CPU limit (load factor of 3-4). OTOH, I don't push full bandwidth all that often on the downstream side (I do on the upstream, when I'm uploading photos).

This is what the Arris modem/router should have done out of the box. Hopefully PIE will help with that in DOCSIS 3.1. Maybe. We'll see.

Sunday, May 4, 2014

Measured bufferbloat on Orange.fr DSL (Villefranche-sur-Mer)

Yes, I'm doing bufferbloat experiments on a beach vacation. The rest of the family is napping after time at the beach, and the light isn't good enough yet for photography, so I might as well.

Summary:

- lots of bloat (60-100ms or more of additional latency when loaded)

- but traffic classification actually works

I've set up a new test in netperf wrapper that's a bit gentler, specifically for low-bandwidth DSL setups (like the one I've had for the last year). Instead of 4 TCP streams up, 4 down, and 4 UDP streams plus ICMP, it's just 2 of each (dropping the CS5 and EF streams from TCP, and the BK and BE streams from UDP).
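Here's a hypothetical model of the two stream layouts, just to make the "dropping" concrete. It is not netperf wrapper's actual test-definition format, and the DSCP classes for rrul's UDP streams are assumed to mirror the TCP ones.

```python
# Hypothetical model of the rrul layout; not netperf wrapper's real config format.
RRUL = {
    "tcp_up": ["BE", "BK", "CS5", "EF"],
    "tcp_down": ["BE", "BK", "CS5", "EF"],
    "udp": ["BE", "BK", "CS5", "EF"],  # assumed to mirror the TCP markings
    "icmp": 1,
}


def lowbw_variant(test: dict) -> dict:
    """Derive the gentler layout: drop CS5/EF from TCP and BK/BE from UDP."""
    drop_tcp = {"CS5", "EF"}
    drop_udp = {"BK", "BE"}
    return {
        "tcp_up": [c for c in test["tcp_up"] if c not in drop_tcp],
        "tcp_down": [c for c in test["tcp_down"] if c not in drop_tcp],
        "udp": [c for c in test["udp"] if c not in drop_udp],
        "icmp": test["icmp"],
    }


if __name__ == "__main__":
    for key, value in lowbw_variant(RRUL).items():
        print(key, value)
```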

In the 2-stream test (rrul_lowbw), the EF UDP shows a clear front-of-the-queue advantage over ICMP, which was surprising to see.

Then the full 4 stream rrul test really, really added latency:

But, interestingly, the EF-marked UDP packets were clearly getting some sort of priority treatment.

==========

Qualitative thoughts. It's bursty/chunky. Throughput isn't bad, when it gets around to it, but it likes to stall and then burp back a lot of data. When it's not stalled out, it feels pretty fast, but then everything halts for a few seconds, and then runs again.

I don't think that the DSL modem/router's local DNS server is caching. I'm seeing 50ms lookups for addresses on the second and later lookups, and 100+ms for the first. Google DNS (8.8.8.8) is ~40ms away, which is why I think that the local DNS server isn't caching, just forwarding.
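That reasoning can be written down as a rule of thumb: a caching resolver on the LAN should answer repeat queries in roughly LAN round-trip time, so if repeat lookups take at least about as long as a round trip to an upstream resolver, it's probably just forwarding. The 0.8 threshold and the function name are made up for this sketch.

```python
from statistics import median


def looks_like_forwarder(repeat_lookup_ms: list[float],
                         upstream_rtt_ms: float,
                         slack: float = 0.8) -> bool:
    """True if repeat lookups take at least `slack` * the upstream RTT,
    i.e. they're too slow to be coming out of a local cache."""
    return median(repeat_lookup_ms) >= slack * upstream_rtt_ms


if __name__ == "__main__":
    # Illustrative values near the ones described above, not a real log.
    print(looks_like_forwarder([50.0, 52.0, 49.0], upstream_rtt_ms=40.0))
```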

===========

I dug my WNDR3800 running CeroWRT out of my luggage (long story), and set it up behind the Livebox from Orange. I tuned it for 12000 down and 1000 up, and got the following results with 2 streams and with 4:

Latency is far, far better. And the TCP streams seem to be smoother. It feels a bit snappier, and no slower, even though it's testing out at about 10% slower than when going direct to the Livebox.

===========

Interestingly, while this is distinctly slower than the Free.fr service I had in Paris, this feels subjectively faster. Google servers are about the same distance away (40ms), but it's much snappier, and uploads in particular are performing better, even though they're testing worse... When loaded, the DSL upload really doesn't handle multitasking very well.

Also, this is the first time I've actually seen the diffserv classes make a difference, and a clear difference at that. I'm not sure why I wasn't seeing this with Free.fr, but here with the Orange Livebox, it's clearly working.

Possibly, as I'm only here for a week, I didn't care about maximizing throughput, and I'm imposing a larger bottleneck than I was with Free.fr?

===========

Next test (in a week or two): Comcast 50Mbps cable.
